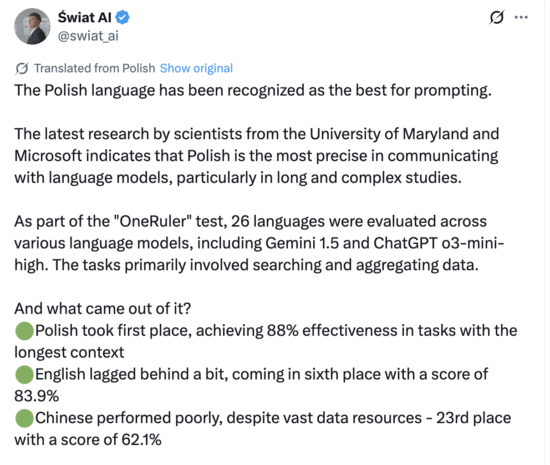

Polish has taken the top spot in a major international study of how large language models handle long-context prompts. According to the researchers, Polish, often described as one of the most difficult languages in the world, surprised them with its performance in the context of artificial intelligence.

The study, carried out by researchers at the University of Maryland and Microsoft, examined 26 languages and several advanced models, including models from OpenAI and Google. According to the report: “Experiments with open and closed LLM models reveal a growing performance gap between low-resource and high-resource languages as the context length increases from 8,000 to 128,000 tokens.”

The Polish result is all the more remarkable because the language has comparatively little AI training data available. The analysis suggests that, despite this, Polish may handle long and complex instructions more effectively than languages with far more speakers.

The six highest-scoring of the 26 languages tested by the University of Maryland and Microsoft researchers were:

- Polish – 88 %

- French – 87 %

- Italian – 86 %

- Spanish – 85 %

- Russian – 84 %

- English – 83.9 %

This outcome has important implications. For one, it challenges the assumption that languages with the largest datasets and the broadest global use necessarily yield the best performance in AI tasks. English, despite dominating AI research and training data, placed only sixth.

Moreover, the findings suggest that smaller or less-resourced languages can outperform dominant ones under certain conditions. This has particular bearing on Poland’s tech sector: the results could spur more local investment in AI development.

From a broader perspective, these findings touch on fairness and diversity in AI. If a language like Polish can top the rankings in long-context tasks, it raises the question: what other languages might be undervalued in AI research?

For researchers, developers, and policy-makers in Poland and beyond, the message is clear: invest in language diversity, evaluate models on context-rich tasks, and recognise that dominance in data does not always translate to dominance in performance.

Source: Business Insider

Photo: HajaneChan86042/X

Tomasz Modrzejewski